How We Switched to a Continuous Delivery Pipeline in 3 Months

First Month

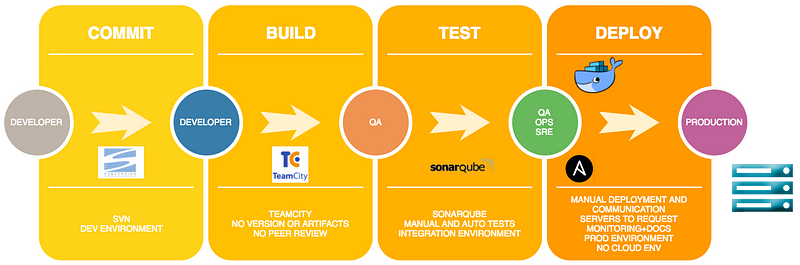

Background

Our deployment process was outdated and largely manual, for several reasons:

- Deployment was manual (build, publish/promote, release and deploy)

- Functional and technical tests were manual

- Releases took a long time to reach production

- Releases frequently introduced defects and had to be rolled back

- There was tight coupling between the infrastructure, development and QA teams

- There were many release-specific special cases, which were hard to maintain and operate

- Provisioning a server took too long and required a new specification each time

- No release management was in place

- No artifacts were created or packaged

- The code was hosted in Subversion with a multi-branch workflow

To avoid deploying defects, ease releases and post-deployment testing, and get closer to the market, we decided to turn our existing pipeline into a CI/CD pipeline.

Organization

We organized the project following an Agile methodology. We began with a “kickoff” page in Confluence to initiate the project. A formal kickoff meeting must be set up if at least one of the following conditions is met:

- 3+ developers are going to work on the project for at least 2 sprints,

- The project has an impact on shared resources or on the performance of a web application,

- The rollout or rollback of the project does not follow the usual process,

- The project adds a new item to the company stack,

- The project involves the creation of a new service, application or tool,

- The project requires additional hardware or resources,

- The project will be hosted on machines with a new kind of configuration,

- The project adds or modifies cookies, or modifies URLs,

- …

Once we had a first clean draft, we chose a name for the project: Jaune Doe (because John Doe is everywhere). We also sent the draft to the R&D team for review. This step took 2 weeks.

We got the go-ahead from our stakeholders during the kickoff meeting, then began work on the technical part. We collaborated on the migration to Git, one of the biggest parts of this project, and adopted a “one-branch organisation”.

To be fully dedicated to the project, we set up a virtual team (vteam) of 3 engineers: one PM/lead, one SRE and one QA engineer.

The project organization was simple. We continuously asked and answered these questions:

- What needs to be done this week? A follow-up every Monday for the weekly “menu”

- What is keeping you from doing your tasks? A follow-up every Thursday to identify the pain points that keep us from doing our job

- What did we accomplish since last week? A demo meeting every Friday morning to show our progress

Acting as project manager, I chose mind mapping (e.g. coggle.it) to share ideas and a to-do list. Our mind map was split into 3 main branches: Build, Promote and Deploy. In the end, each leaf of the map became a Jira ticket on a Kanban board listing all the to-do actions.

We ended up with around 50 tickets:

Goals

The goals of our new deployment methodology are:

- Deploying an IIS application

- Deploying a Docker application

- Deploying Windows services

- All deployments should use artifacts, including SQL databases (deployed from DACPAC packages)

- Deploying infrastructure services (configuration, servers, pools)

- Deployments can target the cloud or bare-metal servers

- Each release/deployment should be tracked

- Deployment should be fully automated

- You write it, you own it: Developers should deploy their own applications

- Development should be test-driven

- Delivering every change to production as soon as possible after it is made

- Separating configuration from code

- Reducing our Time to Market

Non-Goals

- We will not manage the code pipeline; we will only focus on how infrastructure can make continuous integration smoother.

- We will not provide hosting for stateless services.

Design

The idea is to move a release through the different stages, from build to production, using automation. This also requires the following building blocks:

- Service isolation

- Agnostic environment

- Horizontal Scaling

- Orchestration

- Automatic monitoring

- Every release has a ticket

- Rolling updates

- A test suite that runs at every step of the deployment

- Ability to deploy on cloud and bare-metal servers

We would use a pipeline along these lines:

- Check out code and build

- Unit testing and quality control

- Deploy to test environment

- Fetch latest builds

- Packaging

- Integration testing

- Deploy to production

- Production testing
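The point of ordering these stages is that a failure anywhere stops the release before it reaches production. Here is a minimal Python sketch of that gating logic, assuming each stage is a hypothetical callable that returns True on success; the stage names mirror the list above, and this is not a TeamCity configuration.

```python
from typing import Callable, List, Tuple

# Stage names mirror the pipeline list; the callables are hypothetical
# stand-ins for real CI steps and return True on success.
STAGE_NAMES = [
    "checkout_and_build",
    "unit_tests_and_quality",
    "deploy_test_env",
    "fetch_latest_builds",
    "packaging",
    "integration_tests",
    "deploy_production",
    "production_tests",
]

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> Tuple[bool, List[str]]:
    """Run stages in order and stop at the first failure.

    Returns (success, names of the stages that were attempted)."""
    attempted: List[str] = []
    for name, step in stages:
        attempted.append(name)
        if not step():
            return False, attempted  # later stages, including production, never run
    return True, attempted


if __name__ == "__main__":
    # Simulate a failing integration test: nothing after it is attempted.
    stages = [(name, lambda name=name: name != "integration_tests") for name in STAGE_NAMES]
    ok, attempted = run_pipeline(stages)
    print(ok, attempted[-1])  # False integration_tests
```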

Release definition

For us, a release is one or more deployable artifacts plus all the other outputs of the pipeline:

- one or more deployable artifacts,

- a manifest (README, INSTALL, VERSION, CHANGELOG),

- logs,

- debug artifacts,

- runtime/buildtime dependencies and their versions.

For MSBuild, artifacts are detected using a custom property.
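The release definition can be captured as a small data structure. The sketch below is one possible shape, with hypothetical field names and a validation rule of our own choosing (at least one artifact, the four manifest files, and a VERSION file that matches the release version); it is not the format produced by MSBuild or TeamCity.

```python
from dataclasses import dataclass, field
from typing import Dict, List

REQUIRED_MANIFEST = ("README", "INSTALL", "VERSION", "CHANGELOG")


@dataclass
class Release:
    """Everything a release bundles; field names are illustrative only."""

    version: str
    artifacts: List[str]  # one or more deployable artifacts
    manifest: Dict[str, str] = field(default_factory=dict)  # file name -> content
    logs: List[str] = field(default_factory=list)
    debug_artifacts: List[str] = field(default_factory=list)
    dependencies: Dict[str, str] = field(default_factory=dict)  # name -> version

    def problems(self) -> List[str]:
        """List what is missing or inconsistent (assumed rules); empty means complete."""
        found: List[str] = []
        if not self.artifacts:
            found.append("no deployable artifact")
        for name in REQUIRED_MANIFEST:
            if name not in self.manifest:
                found.append(f"manifest is missing {name}")
        declared = self.manifest.get("VERSION", self.version).strip()
        if declared != self.version:
            found.append(f"VERSION says {declared}, release is {self.version}")
        return found
```

A complete release such as `Release("1.4.0", ["AppBot.zip"], {"README": "", "INSTALL": "", "VERSION": "1.4.0\n", "CHANGELOG": ""})` reports no problems; the version number there is made up for illustration.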

Build definition

Branch build (no concurrent builds, partial builds)

The build is scheduled as soon as possible, after a quiet period. If no baseline is found, a full build is triggered. The steps are:

- For each known repository, get the hash of the branch HEAD (master must exist and be the default branch)

- For each modified repository, clone it at the given branch

- Scan project dependencies and update the dependency graph (starting from the baseline)

- Clone the missing repositories so that everything can be built bottom-up

- Generate an artifact from all cloned repositories

- Build the artifact

- Run unit tests on the artifact

- Publish and push the artifacts for each project

- The unit test report is stored in TeamCity

- The build log is stored in TeamCity

- Update the latest baseline for the branch if everything is OK

Build on PR (ephemeral & concurrent)

The build is scheduled immediately. If no baseline is found, the build fails.

- Get the latest baseline for the branch

- Clone the repository and apply the patch

- Scan project dependencies and update the dependency graph (starting from the baseline)

- Clone the missing repositories so that everything can be built bottom-up

- Generate the artifact from all cloned repositories

- Build the artifact

- Run unit tests on the artifact

- The unit test report is stored in TeamCity

- Build log is stored in TeamCity
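The two build types differ mainly in scheduling and in how they treat a missing baseline. This Python sketch models both rules with hypothetical function names and trigger values (`"branch"` or `"pr"`). The quiet period is implemented as a simple debounce, and the sketch deliberately ignores the no-concurrent-builds constraint. A real scheduler would also wait for a running branch build to finish.

```python
from typing import List, Optional


def branch_build_starts(push_times: List[float], quiet_period: float) -> List[float]:
    """Start times of branch builds when a quiet period is applied.

    A build starts only once `quiet_period` seconds have passed without a new
    push, so a burst of pushes collapses into a single build."""
    starts: List[float] = []
    last_push: Optional[float] = None
    for t in sorted(push_times):
        if last_push is not None and t - last_push >= quiet_period:
            starts.append(last_push + quiet_period)  # the previous burst settled
        last_push = t
    if last_push is not None:
        starts.append(last_push + quiet_period)
    return starts


def build_mode(trigger: str, baseline_found: bool) -> str:
    """Return "full" or "partial"; hypothetical triggers are "branch" and "pr"."""
    if trigger == "pr":
        if not baseline_found:
            raise RuntimeError("PR build failed: no baseline for the target branch")
        return "partial"
    # Branch builds fall back to a full build when no baseline exists yet.
    return "partial" if baseline_found else "full"


if __name__ == "__main__":
    # Pushes at t=0, 10 and 20 s form one burst; the push at t=200 s is separate.
    print(branch_build_starts([0, 10, 20, 200], quiet_period=60))  # [80, 260]
```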

Bootstrap repository

A bootstrap build repository will be defined and will contain:

- Build scripts

- TeamCity build definition

- Repositories directory

Any modification to this repository triggers a build.

Version definition

- Deploying a release means specifying which artifacts, which versions, the deployment order and any mandatory compatibility constraints

- The SRE team has a full view of the versions in production, plus a daily report

- The versioned package is the same from DEV to PROD; a configuration file declares each environment

- A tested version means that at each stage (development, integration, production) it passes system and application/functional tests
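Deployment order and compatibility constraints can be checked before anything is deployed. The sketch below assumes a hypothetical data model: an ordered plan of (artifact, version) pairs, a table of the minimum versions each artifact requires, and the versions currently running in production. It also assumes plain dotted numeric versions such as 2.4.0.

```python
from typing import Dict, List, Tuple

# Hypothetical data model for a release plan.
Plan = List[Tuple[str, str]]                          # ordered (artifact, version) steps
Requirements = Dict[Tuple[str, str], Dict[str, str]]  # (artifact, version) -> {dep: min version}


def parse_version(version: str) -> Tuple[int, ...]:
    """'2.4.0' -> (2, 4, 0); assumes purely numeric dotted versions."""
    return tuple(int(part) for part in version.split("."))


def check_plan(plan: Plan, requires: Requirements, production: Dict[str, str]) -> List[str]:
    """Return compatibility violations for deploying `plan` in order (empty = safe)."""
    running = dict(production)  # what is live before each step
    problems: List[str] = []
    for artifact, version in plan:
        for dep, minimum in requires.get((artifact, version), {}).items():
            current = running.get(dep)
            if current is None or parse_version(current) < parse_version(minimum):
                problems.append(f"{artifact} {version} needs {dep} >= {minimum}, found {current}")
        running[artifact] = version  # later steps see this version as live
    return problems


if __name__ == "__main__":
    production = {"api": "2.3.0", "web": "1.9.0"}
    requires = {("web", "2.0.0"): {"api": "2.4.0"}}
    # Wrong order: web 2.0.0 would go live before the api it needs.
    print(check_plan([("web", "2.0.0"), ("api", "2.4.0")], requires, production))
    print(check_plan([("api", "2.4.0"), ("web", "2.0.0")], requires, production))  # []
```

Comparing versions as integer tuples avoids the classic string-comparison trap where "10.0" sorts before "9.0".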

Deployment Workflow

Every day, here is what happens:

- A job takes a snapshot of the latest artifacts and DACPAC files, then deploys them to a sandbox

- The code is integration-tested in the sandbox (end-to-end tests, 30 minutes max)

- If the tests pass, the code is published: a baseline of all build artifacts is created and stored in a repository (e.g. quay.io for Docker images)

- A deployment happens when a developer decides to take a release and put it on the production servers, through a ticket

- The code is deployed progressively to the production servers during the day (10%, then 50%, then 100%)

- At the end of the deployment, business and technical metrics are checked over the following 10–15 minutes

Production deployment is progressive (10/50/100%) so that we can stop it as soon as we detect an incident, before the full release. Progressive deployment also lets us release worldwide at once, instead of releasing to one datacenter first and then to the others.
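The 10/50/100% rollout can be expressed as a loop that widens the set of updated servers and checks health after each step. In this Python sketch, `deploy` and `healthy` are hypothetical hooks; the second would wrap the business and technical metrics checked after each step. Rounding up guarantees that even a small pool gets at least one server in the first step.

```python
import math
from typing import Callable, List, Sequence, Tuple

ROLLOUT_STEPS = (10, 50, 100)  # percentage of servers updated after each step


def progressive_rollout(
    servers: Sequence[str],
    deploy: Callable[[str], None],  # hypothetical hook: install the release on one server
    healthy: Callable[[], bool],    # hypothetical hook: evaluate post-step metrics
    steps: Sequence[int] = ROLLOUT_STEPS,
) -> Tuple[bool, List[str]]:
    """Deploy to a growing share of servers, checking health after each step.

    Returns (completed, servers updated so far). On the first unhealthy check the
    rollout stops; rolling back the updated servers is left to the caller."""
    updated: List[str] = []
    for pct in steps:
        target = math.ceil(len(servers) * pct / 100)  # round up: at least one server
        for server in servers[len(updated):target]:
            deploy(server)
            updated.append(server)
        if not healthy():
            return False, updated
    return True, updated


if __name__ == "__main__":
    pool = [f"web{i:02d}" for i in range(20)]
    checks = iter([True, False])  # metrics degrade after the 50% step
    done, updated = progressive_rollout(pool, lambda s: None, lambda: next(checks))
    print(done, len(updated))  # False 10
```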

Deployment rules

Releasing all applications at the same time cannot be done reliably, so deployments follow these rules:

- Every deployment must happen within local business hours, from 10:00 AM to 04:00 PM local time

- The production team can stop a production deployment at any time

- There is no fixed limit on the number of releases deployed per day; it depends on the health of the production platform

- No concurrent deployments

- Ensure a rollback can be done at any time

- Ensure every deployment is tracked

- No deployments on Fridays, weekends or bank holidays

- Every deployment has a version number

- Collect the team's features, communicate, and check that everything is OK before proceeding
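Several of these rules are mechanical enough for the deployment tooling to enforce. The following Python sketch checks the time window, the Friday/weekend/bank-holiday ban and the no-concurrency rule. The holiday calendar and the "deployment running" flag are assumed inputs, and the sketch only checks the start time, not whether the deployment finishes before 04:00 PM.

```python
from datetime import date, datetime, time
from typing import Set, Tuple

WINDOW_START = time(10, 0)  # 10:00 AM local time
WINDOW_END = time(16, 0)    # 04:00 PM local time


def can_start_deployment(
    now: datetime, bank_holidays: Set[date], deployment_running: bool
) -> Tuple[bool, str]:
    """Check a local timestamp against the rules; returns (allowed, reason).

    Assumed inputs: the holiday calendar and the running flag come from elsewhere."""
    if deployment_running:
        return False, "another deployment is in progress"
    if now.weekday() >= 4:  # Monday is 0, so 4-6 are Friday to Sunday
        return False, "no deployments on Fridays or weekends"
    if now.date() in bank_holidays:
        return False, "no deployments on bank holidays"
    if not WINDOW_START <= now.time() < WINDOW_END:
        return False, "outside the 10:00-16:00 local deployment window"
    return True, "ok"


if __name__ == "__main__":
    print(can_start_deployment(datetime(2024, 3, 12, 11, 0), set(), False))  # (True, 'ok')
    print(can_start_deployment(datetime(2024, 3, 15, 11, 0), set(), False))  # a Friday
```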

Stack for Core Functions

- Ansible-Vault for managing secrets: it keeps sensitive data such as passwords or keys in encrypted files rather than as plaintext in playbooks or roles

- Kubernetes for managing, organizing and scheduling containers; we will use it to manage resources and container deployments

- Docker as the container runtime

- Ansible for provisioning, standard server operations and legacy deployments. Ansible will be the Swiss Army knife of the platform: it will deploy configuration in response to the events we choose (for example, a new Docker image or heavy load on the on-premise platform)

- etcd for service discovery. etcd will be our key-value configuration store and will hold keys for specific needs

- Prometheus, together with PRTG, for monitoring and alerting

- SCM with Git and Bitbucket

- TeamCity for CI

Stack for Other Functions

- Jira for tracking

- Slack for collaboration and communication

- Grafana for dashboards

- Portainer UI for Docker

- Kubernetes Dashboard as the web UI for Kubernetes

Second Month

All development, configuration and installation work was done during this month, which also saw the arrival of the new servers.

Hardware and Software installation

- 12 Dell R430 servers: 2.2 GHz CPUs, 32 or 64 GB of RAM, 200 GB SSD

- 3 servers per datacenter

- All software is open source

Legacy deployment

We use Ansible to handle and manage legacy applications:

ansible-playbook -i windows windows_services.yml -e "env=dev app=appbot url=http://xxxx.server.com/repository/download/AppBots_Trunk_Dev_Smartbot_BuildAndDeploy/.lastSuccessful/AppBot.zip release="

- $env → development or production environment

- $app → generic application name; must match the name of the executable

- $url → path to the artifact

- $release → release version

Legacy deployment is done by Ansible following a trigger from TeamCity.
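When TeamCity triggers a legacy deployment, it only has to fill in the four variables above. This Python sketch builds the same `ansible-playbook` invocation as an argument list. The inventory (`windows`) and playbook (`windows_services.yml`) come from the example command; the empty-value and whitespace checks are our own assumption, since key=value extra vars are split on spaces.

```python
import shlex
import subprocess
from typing import List


def legacy_deploy_command(env: str, app: str, url: str, release: str) -> List[str]:
    """Argument list for the legacy deployment playbook shown above."""
    extra_vars = {"env": env, "app": app, "url": url, "release": release}
    for key, value in extra_vars.items():
        # Assumed check: key=value extra vars are space-separated, so values
        # must be non-empty and must not contain whitespace.
        if not value or any(ch.isspace() for ch in value):
            raise ValueError(f"{key} must be non-empty and contain no whitespace")
    return [
        "ansible-playbook", "-i", "windows", "windows_services.yml",
        "-e", " ".join(f"{key}={value}" for key, value in extra_vars.items()),
    ]


def run_legacy_deploy(env: str, app: str, url: str, release: str) -> None:
    """Run the playbook; raises CalledProcessError if Ansible exits non-zero."""
    cmd = legacy_deploy_command(env, app, url, release)
    print("Running:", shlex.join(cmd))
    subprocess.run(cmd, check=True)
```

Passing a list to `subprocess.run` (instead of a shell string) avoids quoting problems with the artifact URL.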

Container deployment

Docker deployment is done by Kubernetes following a trigger from TeamCity.

Configuration deployment

We created a shared repository and populated it with the files that drive application deployment.

Manifest creation

We created a manifest to support collaboration between teams. Our manifest looks like this:

Deployment recipe and artifact organisation

The artifact contains:

- An APP folder containing the binaries and application files; the folder is copied to the target host

APP/ --> Deployed under C:\smart\Release\{{ app }}\{{ app }}-{{ env }}-{{ release }}\App or /App (Linux)

- A CONFIG folder containing the infrastructure configuration, in which values are referenced with placeholders such as “{{ http_user_password }}”. The placeholders are replaced with the values declared in the manifest file.

CONFIG/ ---> Deployed under C:\smart\Release\{{ app }}\{{ app }}-{{ env }}-{{ release }}\config | /Config/app_name-env.(json|config|xml)

- An APP.yml file containing the specifications and values used for Kubernetes orchestration or by Ansible

App.yml ---> Used for the Kubernetes Deployment, the Docker run command and IIS-specific configuration
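The `{{ name }}` placeholders are Jinja2 syntax, which Ansible renders natively. To show the idea without Ansible, here is a deliberately simplified Python stand-in that handles only bare variable names (no filters or expressions) and fails loudly when a value is missing. In practice the values would come from the manifest and Ansible-Vault; the sample values below are hypothetical.

```python
import re
from typing import Dict

# Simplified stand-in for Ansible's Jinja2 templating: only {{ name }} is supported.
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def render(template: str, values: Dict[str, str]) -> str:
    """Replace each {{ name }} with values[name]; unknown names raise KeyError."""

    def substitute(match: "re.Match[str]") -> str:
        name = match.group(1)
        if name not in values:
            raise KeyError(f"no value declared for placeholder '{name}'")
        return values[name]  # returned text is inserted literally, backslashes included

    return PLACEHOLDER.sub(substitute, template)


# Path template from the artifact layout above (Windows hosts).
APP_DIR = r"C:\smart\Release\{{ app }}\{{ app }}-{{ env }}-{{ release }}\App"

if __name__ == "__main__":
    print(render(APP_DIR, {"app": "appbot", "env": "dev", "release": "1.4.0"}))
    # C:\smart\Release\appbot\appbot-dev-1.4.0\App
```

Failing on an unknown placeholder is a deliberate choice: a config file that silently keeps `{{ http_user_password }}` would only break at runtime, on the target host.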

Third Month

The last month was dedicated to finishing the remaining tasks: tracking on a Confluence page, implementing the deployment rules, using Selenium for application tests, adding Slack notifications, and running the progressive deployment with one application and a canary environment.
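Slack notifications like these are commonly sent through an incoming webhook, which accepts an HTTP POST with a JSON payload containing a `text` field. The standard-library Python sketch below only builds the request without sending it. The webhook URL and message format are placeholders.

```python
import json
import urllib.request


def slack_notification(
    webhook_url: str, app: str, release: str, step: str, ok: bool
) -> urllib.request.Request:
    """Build (without sending) a POST request for a Slack incoming webhook."""
    status = "succeeded" if ok else "FAILED"
    payload = {"text": f"Deployment of {app} {release}: step {step} {status}"}
    return urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


if __name__ == "__main__":
    # Placeholder URL: a real webhook URL is a secret (e.g. kept in Ansible-Vault).
    request = slack_notification(
        "https://hooks.slack.com/services/T000/B000/XXXX", "appbot", "1.4.0", "50%", True
    )
    # urllib.request.urlopen(request, timeout=10) would actually post the message.
    print(request.data.decode("utf-8"))
```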

Conclusion

This project was very useful for the company: it made releasing easier and improved our time to market. We delivered on time, even slightly ahead of schedule. We now handle new applications with Kubernetes, and all legacy deployments (IIS and Windows services) with Ansible. We switched from multiple branches to a single branch, migrated our SCM to Git, and now build a versioned artifact for each release.
